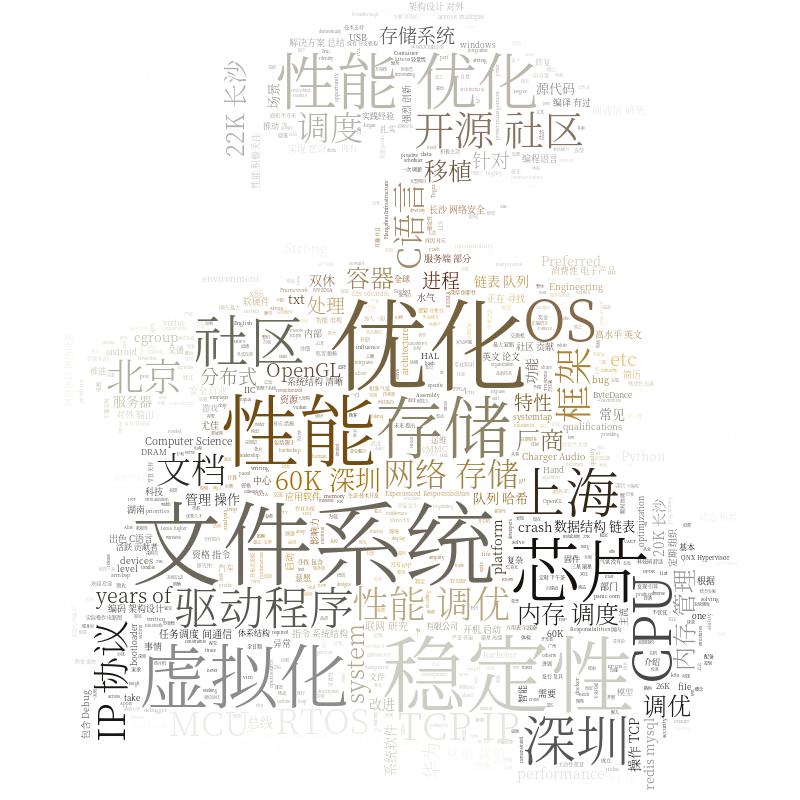

Highlight Job Description Keywords with WordCloud

Visual images are always more attractive than pure numbers or statistics, that’s why WordCloud are so popular, I am considering look for a new job, and gathered some vacancies with job descriptions. I want to know what parts they are most interest, so creating a WordCloud seems perfect for this purpose.

The following python packages are required for creating WordCloud:

pip3 install wordcloud

pip3 install imageio

pip3 install jieba

imageio for generating final image, jieba for Chinese segmentation.

You may want to use a mirror site to save download time, this can be done by either specify in command line:

pip3 install jieba -i https://pypi.tuna.tsinghua.edu.cn/simple

or configured in dot file:

cat << EOT >> $HOME/.pip/pip.conf

[global]

index-url = https://pypi.tuna.tsinghua.edu.cn/simple

EOT

The following script is based on example from official repository with slight adaptions, you need to download SourceHanSerifK-Light.otf and put the font file into /fonts/SourceHanSerif/ to make this work.

#!/usr/bin/env python

import jieba

import os

from os import path

from imageio import imread

from wordcloud import WordCloud, ImageColorGenerator

# Setting up parallel processes: 4

jieba.enable_parallel(4)

d= os.getcwd()

filterwords_path = d + '/filterwords.txt'

# Chinese fonts must be set

font_path = d + '/fonts/SourceHanSerif/SourceHanSerifK-Light.otf'

# the path to save worldcloud

imgname = d + '/jobs-masked.jpg'

# read the mask / color image taken from

back_coloring = imread(path.join(d, d + '/mask.jpg'))

# Read the whole text.

text = open(path.join(d, d + '/jd.txt')).read()

# The function for processing text with Jieba

def jieba_processing_txt(text):

mywordlist = []

seg_list = jieba.cut(text, cut_all=False)

liststr = "/ ".join(seg_list)

with open(filterwords_path, encoding='utf-8') as f_filter:

f_filter_text = f_filter.read()

f_filter_seg_list = f_filter_text.splitlines()

for myword in liststr.split('/'):

if not (myword.strip() in f_filter_seg_list) and len(myword.strip()) > 1:

mywordlist.append(myword)

return ' '.join(mywordlist)

wc = WordCloud(font_path=font_path, background_color="white", max_words=2000, mask=back_coloring,

max_font_size=100, random_state=42, width=1000, height=860, margin=2,)

wc.generate(jieba_processing_txt(text))

# create coloring from image

image_colors_byImg = ImageColorGenerator(back_coloring)

wc.recolor(color_func=image_colors_byImg)

# save wordcloud

wc.to_file(path.join(d, imgname))