Reading Notes on BPF Performance Tools

- Ch 1 Introduction

- Ch 2 Technology Background

- Ch 3 Performance Analysis

- Ch 4 BCC

- Ch 5 bpftrace

- Ch 6 CPUs

- Ch 7 Memory

- Ch 8 File Systems

- Ch 9 Disk I/O

- Ch 13 Applications

- Ch 14 Kernel

- Ch 18 Tips, Tricks and Common Problems

Chapter 3. Performance Analysis

This chapter is a crash course in performance analysis.

3.1 OVERVIEW

3.1.1 Goals

- Latency: How long to accomplish a request or operation, typically measured in milliseconds.

- Rate: An operation or request rate per second.

- Throughput: Typically data movement in bits or bytes per second.

- Utilization: How busy a resource is over time as a percentage.

- Cost: The price/performance ratio.

3.1.2 Activities

| Performance Activity | BPF Performance Tools | |

|---|---|---|

| 1 | Performance characterization of prototype software or hardware | To measure latency histograms under different workloads. |

| 2 | Performance analysis of development code, pre-integration | To solve performance bottlenecks, and find general performance improvements. |

| 3 | Perform no-regression testing of software builds, pre- or post-release | To record code usage and latency from different sources, allowing faster resolution of regressions. |

| 4 | Benchmarking/benchmarketing for software releases | To study performance to find opportunities to improve benchmark numbers. |

| 5 | Proof-of-concept testing in the target environment | To generate latency histograms to ensure that performance meets request latency service level agreements. |

| 6 | Monitoring of running production software | For creating tools that can run 24x7 to expose new metrics that would otherwise be blind spots. |

| 7 | Performance analysis of issues | To solve a given performance issue with tools and custom instrumentation as needed. |

3.2 PERFORMANCE METHODOLOGIES

A methodology is a process you can follow, which provides a starting point, steps, and an ending point.

3.2.1 Workload Characterization

Suggested steps for performing workload characterization:

- Who is causing the load? (PID, process name, UID, IP address, …)

- Why is the load called? (code path, stack trace, flame graph)

- What is the load? (IOPS, throughput, type)

- How is the load changing over time? (per-interval summaries)

3.2.2 Drill-Down Analysis

3.2.3 USE Method

For every resource, check:

- Utilization

- Saturation

- Errors

3.2.4 Checklists

3.3 LINUX 60-SECOND ANALYSIS

uptimedmesg | tailvmstat 1mpstat -P ALL 1pidstat 1iostat -xz 1free -msar -n DEV 1sar -n TCP,ETCP 1top

These commands may enable you to solve some performance issues outright.

-

uptime

A high 15-minute load average coupled with a low 1-minute load average can be a sign that you logged in too late to catch the issue. -

dmesg | tail vmstat 1# vmstat 1 -w procs -----------------------memory---------------------- ---swap-- -----io---- -system-- --------cpu-------- r b swpd free buff cache si so bi bo in cs us sy id wa st 0 0 0 325288 90884 1146872 0 0 1 1 5 5 0 0 99 0 0 0 0 0 325288 90884 1146872 0 0 0 0 309 257 0 0 100 0 0 0 0 0 325288 90884 1146872 0 0 0 0 296 264 0 0 100 0 0 0 0 0 325164 90884 1146872 0 0 0 0 277 261 0 0 100 0 0 0 0 0 325164 90884 1146872 0 0 0 0 301 251 0 0 100 0 0 0 0 0 325164 90884 1146872 0 0 0 0 285 260 0 0 100 0 0- r: To interpret: an “r” value greater than the CPU count is saturation.

- si, so: Swap-ins and swap-outs. If these are non-zero, you’re out of memory.

mpstat -P ALL 1$ mpstat -P ALL 1 [...] 03:16:41 AM CPU %usr %nice %sys %iowait %irq %soft %steal %guest %gnice %idle 03:16:42 AM all 14.27 0.00 0.75 0.44 0.00 0.00 0.06 0.00 0.00 84.48 03:16:42 AM 0 100.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 [...]CPU 0 has hit 100% user time, evidence of a single thread bottleneck. Also look out for high %iowait time, which can be then explored with disk I/O tools, and high %sys time, which can then be explored with syscall and kernel tracing, and CPU profiling.

-

pidstat 1

pidstat provides rolling output by default, so that variation over time can be seen. iostat -xz 1$ iostat -xz 1 Linux 4.13.0-19-generic (...) 08/04/2018 _x86_64_ (16 CPU) avg-cpu: %user %nice %system %iowait %steal %idle 0.19 0.00 0.31 0.02 0.00 99.48 22.90 0.00 0.82 0.63 0.06 75.59 Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util nvme0n1 0.00 1167.00 0.00 1220.00 0.00 151293.00 248.02 2.10 1.72 0.00 1.72 0.21 26.00 nvme1n1 0.00 1164.00 0.00 1219.00 0.00 151384.00 248.37 0.90 0.74 0.00 0.74 0.19 23.60 md0 0.00 0.00 0.00 4770.00 0.00 303113.00 127.09 0.00 0.00 0.00 0.00 0.00 0.00 [...]- r/s, w/s, rkB/s, wkB/s: These are the delivered reads, writes, read Kbytes, and write Kbytes per second to the device. Use these for workload characterization. A performance problem may simply be due to an excessive load applied.

- await: The average time for the I/O in milliseconds. This is the time that the application suffers, as it includes both time queued and time being serviced. Larger than expected average times can be an indicator of device saturation, or device problems.

- avgqu-sz: The average number of requests issued to the device. Values greater than one can be evidence of saturation (although devices, especially virtual devices which front multiple back-end disks, can typically operate on requests in parallel.)

- %util: Device utilization. This is really a busy percent, showing the time each second that the device was doing work. It does not show utilization in a capacity planning sense, as devices can operate on requests in parallel. Values greater than 60% typically lead to poor performance (which should be seen in the await column), although it depends on the device. Values close to 100% usually indicate saturation.

-

free -m

Check that the available value is not near zero: it shows how much real memory is available in the system, including in the buffer and page. sar -n DEV 1sar -n DEV 1 Linux 5.7.8+ (arm-64) 08/29/2020 _aarch64_ (8 CPU) 01:46:42 PM IFACE rxpck/s txpck/s rxkB/s txkB/s rxcmp/s txcmp/s rxmcst/s %ifutil 01:46:43 PM lo 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 01:46:43 PM eth0 2.00 2.00 0.15 0.24 0.00 0.00 0.00 0.00-ncan be used to look at network device metrics. Check interface throughput rxkB/s and txkB/s to see if any limit may have been reached.sar -n TCP,ETCP 1# sar -n TCP,ETCP 1 Linux 5.7.8+ (arm-64) 08/29/2020 _aarch64_ (8 CPU) 01:51:53 PM active/s passive/s iseg/s oseg/s 01:51:54 PM 0.00 0.00 1.00 1.00 01:51:53 PM atmptf/s estres/s retrans/s isegerr/s orsts/s 01:51:54 PM 0.00 0.00 0.00 0.00 0.00 01:51:54 PM active/s passive/s iseg/s oseg/s 01:51:55 PM 0.00 0.00 3.00 3.00 01:51:54 PM atmptf/s estres/s retrans/s isegerr/s orsts/s 01:51:55 PM 0.00 0.00 0.00 0.00 0.00Now we’re using sar to look at TCP metrics and TCP errors. Columns to check:

- active/s: Number of locally-initiated TCP connections per second (e.g., via connect()).

- passive/s: Number of remotely-initiated TCP connections per second (e.g., via accept()).

- retrans/s: Number of TCP retransmits per second.

Active and passive connection counts are useful for workload characterization. Retransmits are a sign of a network or remote host issue.

top

3.4 BCC TOOL CHECKLIST

- execsnoop

- opensnoop

- ext4slower (or btrfs*, xfs*, zfs*)

- biolatency

- biosnoop

- cachestat

- tcpconnect

- tcpaccept

- tcpretrans

- runqlat

runqlat times how long threads were waiting for their turn on CPU. - profile

profile is a CPU profiler, a tool to understand which code paths are consuming CPU resources.

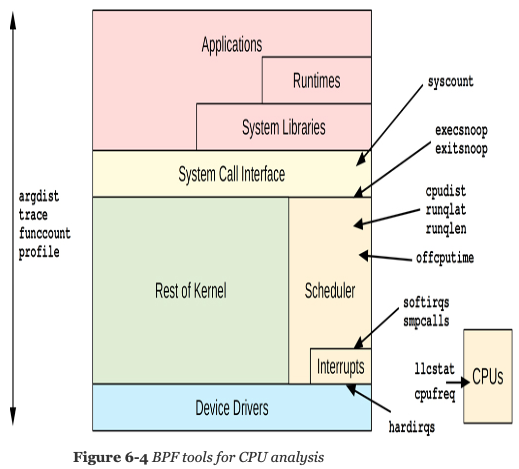

Chapter 6. CPUs

Learning objectives:

- Understand CPU modes, the behavior of the CPU scheduler, and CPU caches.

- Understand areas for CPU scheduler, usage, and hardware analysis with BPF.

- Learn a strategy for successful analysis of CPU performance.

- Solve issues of short-lived processes consuming CPU resources.

- Discover and quantify issues of run queue latency.

- Determine CPU usage through profiled stack traces, and function counts.

- Determine reasons why threads block and leave the CPU.

- Understand system CPU time by tracing syscalls.

- Investigate CPU consumption by soft and hard interrupts.

- Use bpftrace one-liners to explore CPU usage in custom ways.

6.1 BACKGROUND

6.1.1 CPU Modes

- system mode

- user mode

6.1.2 CPU Scheduler

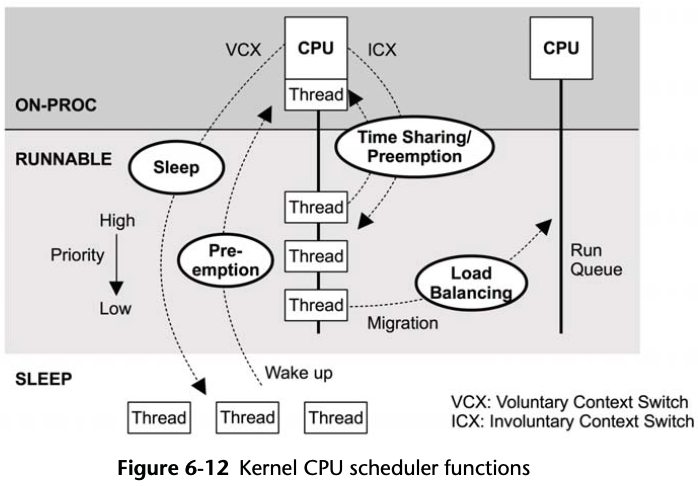

Figure 6-1 CPU scheduler

CFS scheduler uses a red-black tree of future task execution.

Threads leave the CPU in one of two ways:

- voluntary, if they block on I/O, a lock, or a sleep;

- involuntary, if they have exceeded their scheduled allocation of CPU time and are descheduled so that other threads can run, or if they are pre-empted by a higher-priority thread. When a CPU switches from running one thread to another, it switches address spaces and other metadata: this is called a context switch.

Thread migrations: If a thread is in the runnable state and sitting in a run queue while another CPU is idle, the scheduler may migrate the thread to the idle CPU’s run queue so that it can execute sooner.

As a performance optimization, the scheduler implements CPU affinity: the scheduler only migrates threads after they have exceeded a wait time threshold, so that if their wait was short they avoid migration and instead run again on the same CPU where the hardware caches should still be warm.

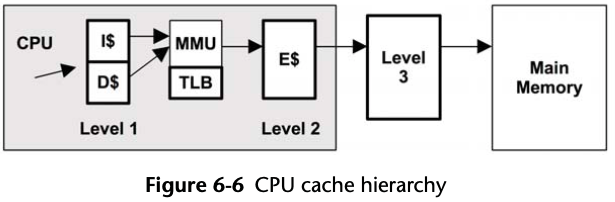

6.1.3 CPU Caches

Figure 6-2 hardware caches.

L1 cache, which is split into separate instruction (I$) and data (D$) caches, and is also small (Kbytes) and fast (nanoseconds).

The caches end with the last level cache (LLC), which is large (Mbytes) and much slower.

The memory management unit (MMU) responsible for translating virtual to physical addresses also has its own cache, the translation lookaside buffer (TLB).

6.1.4 BPF Capabilities

- What new processes are created? What is their lifespan?

- Why is system time high? Is it syscalls? What are they doing?

- How long do threads spend on-CPU for each wakeup?

- How long do threads spend waiting on the run queues?

- What is the maximum length of the run queues?

- Are the run queues balanced across the CPUs?

- Why are threads voluntarily leaving the CPU? For how long?

- What soft and hard IRQs are consuming CPUs?

- How often are CPUs idle when work is available on other run queues?

- What is the LLC hit ratio by application request?

Event Sources

| Event Type | Event Source |

|---|---|

| Kernel functions | kpreobes,kretprobes |

| User-level functions | uprobes, uretprobes |

| System calls | syscall tracepoints |

| Soft interrupts | irq:softirq* tracepoints |

| Hard interrupts | irq:irq_handler* tracepoints |

| Workqueue events | workqueue tracepoints |

| Timed sampling | PMC- or time-based sampling |

| CPU power events | power tracepoints |

| CPU cycles | PMCs |

Overhead

In the worst case, scheduler tracing can add over 10% overhead to a system.

- Infrequent events, such as process execution and thread migrations (with at most thousands of events per second) can be instrumented with negligible overhead.

- Profiling (timed sampling) also limits overhead to the fixed rate of samples, reducing overhead to negligible proportions.

6.1.5 Strategy

- Ensure that a CPU workload is running before you spend time with analysis tools. Check system CPU utilization (eg, using mpstat) and that all the CPUs are still online (and some haven’t been offlined for whatever reason).

- Confirm that the workload is CPU-bound.

- Look for high CPU utilization system-wide or on a single CPU (e.g., using mpstat).

- Look for high run queue latency (e.g., using BCC runqlat). Software limits such as cgroups can artificially limit the CPU available to processes, so an application may be CPU-bound on a mostly idle system. This counter-intuitive scenario can be revealed by studying run queue latency.

- Quantify CPU usage as percent utilization system wide, then broken down by process,

CPU mode, and CPU ID. This can be done using traditional tools (e.g., mpstat,

top). Look for high utilization by a single process, mode, or CPU.

- For high system time, frequency count system calls by process and call type, and also examine arguments to look for inefficiencies (eg, using bpftrace one-liners, perf, BCC sysstat).

- Use a profiler to sample stack traces, which can be visualized as a CPU flame graph. Many CPU issues can be found by browsing such flame graphs.

- For CPU consumers identified by profilers, consider writing custom tools to show

more context. Profilers show the functions that are running, but not the arguments

and objects they are operating on, which may be needed to understand CPU usage.

Examples:

- Kernel mode: if a file system is CPU busy doing stat() on files, what are their file names? (e.g., using BCC statsnoop, or in general using tracepoints or kprobes from BPF tools).

- User-mode: If an application is busy processing requests, what are the requests? (e.g., if an application-specific tool is unavailable, one could be developed using USDT or uprobes and BPF tools).

- Measure time in hardware interrupts, since this time may not be visible in timer-based profilers (e.g., BCC hardirqs).

- Browse and execute the BPF tools listed in the BPF tools section of this book.

- Measure CPU instructions-per-cycle (IPC) using PMCs to explain at a high level how much the CPUs are stalled (e.g., using perf). This can be explored with more PMCs, which may identify low cache hit ratios (e.g., BCC llcstat), temperature stalls, etc.

6.2 TRADITIONAL TOOLS

Table of traditional tools

| Tool | Type | Description |

|---|---|---|

| uptime | Kernel Statistics | Show load averages and system uptime |

| top | Kernel Statistics | Show CPU tie by process, and CPU mode times system wide |

| mpstat | Kernel Statistics | Show CPU mode time by CPU |

| perf | Kernel Statistics, Hardware Statistics, Event Tracing | A multi-tool with many subcommands. Profile (timed sampling) of stack traces and event statistics and tracing of PMCs, tracepoints, USDT, kprobes, and uprobes. |

| Ftrace | Kernel Statistics, Event Tracing | Kernel function count statistics, and event tracing of kprobes and uprobes |

6.2.1 Kernel Statistics

Load Averages

$ uptime

00:34:10 up 6:29, 1 user, load average: 20.29, 18.90, 18.70

The load averages are not simple averages (means), but are exponentially damped moving sums, and reflect time beyond one, five, and 15 minutes. The metrics that these summarize show demand on the system: tasks in the CPU runnable state, as well as tasks in the uninterruptible wait state. If you assume that the load averages are showing CPU load, you can divide them by the CPU count to see whether the system is running at CPU saturation – this would be indicated by a ratio of over 1.0. However, a number of problems with load averages, including their inclusion of uninterruptible tasks (those blocked in disk I/O and locks) cast doubt on this interpretation, so they are only really useful for looking at trends over time. You must use other tools, such as the BPF-based offcputime, to see if the load is CPU- or uninterruptible-time based.

top

Tools such as pidstat(1) can be used to print rolling output of process CPU usage

for this purpose, as well as monitoring systems that record process usage.

tiptop which sources PMCs, atop which uses process events to display short-lived processes, and the biotop and tcptop tools I developed which use BPF.

mpstat

This can be used to identify issues of balance, where some CPUs have high utilization

while others are idle.

This can occur for a number of reasons, such as:

- applications misconfigured with a thread pool size too small to utilize all CPUs

- software limits that limit a process or container to a subset of CPUs

- software bugs

6.2.2 Hardware Statistics

perf

The perf stat command counts events specified with -e arguments. If no arguments

are supplied, it defaults to a basic set of PMCs, or an extended set if -d is used.

Depending on your processor type and perf version, you may find a detailed list of PMCs shown using perf list.

There are hundreds of PMCs available, together with model specific registers (MSRs) you can determine how CPU internal components are performing:

- the current clock rate of the CPUs

- their temperatures and energy consumption

- the throughput on CPU interconnects and memory busses

- and more.

6.2.3 Hardware Sampling

6.2.4 Timed Sampling

These provide a coarse, cheap-to-collect view of which software is consuming CPU resources: the sample rate can be tuned to be negligible. There are also different types of profilers, some operating in user-mode only and some in kernel-mode. Kernel-mode profilers are usually preferred, as they can capture both kernel- and user-level stacks, providing a more complete picture.

perf

# echo 0 > /proc/sys/kernel/kptr_restrict

# perf record -F 99 -a -g -- sleep 10

[ perf record: Woken up 1 times to write data ]

[ perf record: Captured and wrote 0.883 MB perf.data (7192 samples) ]

# perf report -n --stdio

99 Hertz was chosen instead of 100 to avoid lockstep sampling with other software routines, which would otherwise skew the samples.

Roughly 100 was chosen instead of say 10 or 10,000 as a balance between detail and overhead: too low, and you don’t get enough samples to see the full picture of execution, including large and small code paths; too high, and the overhead of samples skews performance and results.

CPU Flame Graphs

git clone https://github.com/brendangregg/FlameGraph

# cd FlameGraph

# perf record -F 49 -ag -- sleep 30

# perf script --header | ./stackcollapse-perf.pl | ./flamegraph.pl > flame1.svg

At Netflix we have developed a newer flame graph implementation using d3, and an additional tool that can read perf script output: FlameScope, which visualizes profiles as subsecond offset heatmap, from which time ranges can be selected to see the flame graph.

Internals

6.2.5 Event Statistics and Tracing

perf

perf can instrument tracepoints, kprobes, uprobes, and (as of recently) USDT as

well.

As an example, consider an issue where system-wide CPU utilization is high, but

there is no visible process responsible in top. The issue could be short-lived

processes.

To test this hypothesis, count the sched_process_exec tracepoint system-wide using

perf script to show the rate of exec() family syscalls:

# perf stat -e sched:sched_process_exec -I 1000

# time counts unit events

1.000258841 169 sched:sched_process_exec

2.000550707 168 sched:sched_process_exec

3.000676643 167 sched:sched_process_exec

4.000880905 167 sched:sched_process_exec

[...]

# an example of idle system

# perf stat -e sched:sched_process_exec -I 1000

# time counts unit events

1.001371124 0 sched:sched_process_exec

2.002853416 0 sched:sched_process_exec

3.004027371 0 sched:sched_process_exec

4.005679831 1 sched:sched_process_exec

# perf record -e sched:sched_process_exec -a

# perf script

perf has a special subcommand for CPU scheduler analysis called perf sched.

It uses a dump-and-post-process approach for analyzing scheduler behavior, and

provides various reports that can show the CPU runtime per wakeup, the average

and maximum scheduler latency (delay), and ASCII visualizations to show thread

execution per-CPU and migrations.

# perf sched record -- sleep 1

# perf sched timehist

Samples do not have callchains.

time cpu task name wait time sch delay run time

[tid/pid] (msec) (msec) (msec)

--------------- ------ ------------------------------ --------- --------- ---------

888653.810953 [0000] perf_5.7[274586] 0.000 0.000 0.000

888653.811013 [0000] migration/0[11] 0.000 0.011 0.059

888653.811146 [0001] perf_5.7[274586] 0.000 0.000 0.000

888653.811180 [0001] migration/1[15] 0.000 0.007 0.033

However, the dump-and-post-process style is costly.

Where possible, use BPF instead as it can do in-kernel summaries that answer similar questions, and emit the results (for example, the runqlat and runqlen tools).

Ftrace

perf-tools collection mostly uses Ftrace for instrumentation.

Function calls consume CPU, and their names often provide clues as to the workload performed.

6.3 BPF TOOLS

Table of CPU-related tools

| Tool | Source | Target | Overhead | Description |

|---|---|---|---|---|

| execsnoop | bcc/bt | Sched | negligible | List new process execution |

| exitsnoop | bcc | Sched | negligible | Show process lifespan and exit reason |

| runqlat | bcc/bt | Sched | noticeable | Summarize CPU run queue latency |

| runqlen | bcc/bt | Sched | negligible | Summarize CPU run queue length |

| runqslower | bcc | Sched | noticeable* | Print run queue waits slower than a threshold |

| cpudist | bcc | Sched | significant | Summarize on-CPU time |

| cpufreq | book | CPUs | negligible | Sample CPU frequency by process |

| profile | bcc | CPUs | negligible | Sample CPU stack traces |

| offcputime | bcc/book | Sched | significant | Summarize off-CPU stack traces and times |

| syscount | bcc/bt | Syscalls | noticeable* | Count system calls by type and process |

| argdist | bcc | Syscalls | Can be used for syscall analysis | |

| trace | bcc | Syscalls | Can be used for syscall analysis | |

| funccount | bcc | Software | significant* | Count function calls |

| softirqs | bcc | Interrupts | high* | Summarize soft interrupt time |

| hardirqs | bcc | Interrupts | Summarize hard interrupt time | |

| smpcalls | book | Kernel | Time SMP remote CPU calls | |

| llcstat | bcc | PMCs | Summarize LLC hit ratio by process |

6.3.1 execsnoop

It can find issues of short-lived processes that consume CPU resources and can also be used to debug software execution, including application start scripts.

execsnoop(8) has been used to debug many production issues: perturbations from background jobs, slow or failing application startup, slow or failing container startup, etc.

Some applications create new processes without calling exec(2), for example, when creating a pool of worker processes using fork(2) or clone(2) alone.

This situation should be uncommon: applications should be creating pools of worker threads, not processes.

Since the rate of process execution is expected to be relatively low (<1000/sec), the overhead of this tool is expected to be negligible.

execsnoop.bt

#!/usr/bin/env bpftrace

BEGIN

{

printf("%-10s %-5s %s\n", "TIME(ms)", "PID", "ARGS");

}

tracepoint:syscalls:sys_enter_execve

{

printf("%-10u %-5d ", elapsed / 1000000, pid);

join(args->argv);

}

6.3.2 exitsnoop

exitsnoop(8) traces when processes exit, showing their age and exit reason. The age is the time from process creation to termination, and includes time both on and off CPU.

# exitsnoop-bpfcc

PCOMM PID PPID TID AGE(s) EXIT_CODE

agetty 275283 1 275283 10.02 code 1

agetty 275284 1 275284 10.03 code 1

agetty 275285 1 275285 10.03 code 1

agetty 275286 1 275286 10.03 code 1

agetty 275287 1 275287 10.03 code 1

This works by instrumenting the sched:sched_process_exit tracepoint and its

arguments, and also uses bpf_get_current_task() so that the start time can be

read from the task struct (an unstable interface detail).

Since this tracepoint should fire infrequently, the overhead of this tool should be negligible.

6.3.3 runqlat

runqlat is a tool to measure CPU scheduler latency. This is useful for identifying and quantifying issues of CPU saturation, where there is more demand for CPU resources than they can service. The metric measured by runqlat is the time each thread (task) spends waiting for its turn on CPU.

# runqlat-bpfcc 10 1

Tracing run queue latency... Hit Ctrl-C to end.

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 66 |*** |

8 -> 15 : 31 |* |

16 -> 31 : 704 |****************************************|

32 -> 63 : 507 |**************************** |

64 -> 127 : 66 |*** |

128 -> 255 : 8 | |

256 -> 511 : 7 | |

512 -> 1023 : 2 | |

1024 -> 2047 : 0 | |

2048 -> 4095 : 2 | |

4096 -> 8191 : 1 | |

runqlat(8) works by instrumenting scheduler wakeup and context switch events to determine the time from wakeup to running. These events can be very frequent on busy production systems, exceeding one million events per second. Even though BPF is optimized, at these rates even adding one microsecond per event can cause noticeable overhead .

Misconfigured Build

Here is a different example for comparison, this time a 36-CPU build server doing

a software build, where the number of parallel jobs has been set to 72 by mistake,

causing the CPUs to be overloaded:

# runqlat 10 1

Tracing run queue latency... Hit Ctrl-C to end.

usecs : count distribution

0 -> 1 : 1906 |*** |

2 -> 3 : 22087 |****************************************|

4 -> 7 : 21245 |************************************** |

8 -> 15 : 7333 |************* |

16 -> 31 : 4902 |******** |

32 -> 63 : 6002 |********** |

64 -> 127 : 7370 |************* |

128 -> 255 : 13001 |*********************** |

256 -> 511 : 4823 |******** |

512 -> 1023 : 1519 |** |

1024 -> 2047 : 3682 |****** |

2048 -> 4095 : 3170 |***** |

4096 -> 8191 : 5759 |********** |

8192 -> 16383 : 14549 |************************** |

16384 -> 32767 : 5589 |********** |

32768 -> 65535 : 372 | |

65536 -> 131071 : 10 | |

The distribution is now bi-modal, with a second mode centered in the eight to 16 millisecond bucket. This shows significant waiting by threads.

This particular issue is straightforward to identify from other tools and metrics. For example, sar can show CPU utilization (-u) and run queue metrics (-q):

# sar -uq 1

Linux 4.18.0-virtual (...) 01/21/2019 _x86_64_ (36 CPU)

11:06:25 PM CPU %user %nice %system %iowait %steal %idle

11:06:26 PM all 88.06 0.00 11.94 0.00 0.00 0.00

11:06:25 PM runq-sz plist-sz ldavg-1 ldavg-5 ldavg-15 blocked

11:06:26 PM 72 1030 65.90 41.52 34.75 0

[...]

This sar(1) output shows 0% CPU idle and an average run queue size of 72 (which includes both running and runnable) – more than the 36 CPUs available.

runqlat.bt

#!/usr/bin/env bpftrace

#include <linux/sched.h>

BEGIN

{

printf("Tracing CPU scheduler... Hit Ctrl-C to end.\n");

}

tracepoint:sched:sched_wakeup,

tracepoint:sched:sched_wakeup_new

{

@qtime[args->pid] = nsecs;

}

tracepoint:sched:sched_switch

{

if (args->prev_state == TASK_RUNNING) {

@qtime[args->prev_pid] = nsecs;

}

$ns = @qtime[args->next_pid];

if ($ns) {

@usecs = hist((nsecs - $ns) / 1000);

}

delete(@qtime[args->next_pid]);

}

END

{

clear(@qtime);

}

6.3.4 runqlen

runqlen is a tool to sample the length of the CPU run queues, counting how many tasks are waiting their turn.

I’d describe run queue length as a secondary performance metric, and run queue latency as primary.

This timed sampling has negligible overhead compared to runqlat’s scheduler tracing. For 24x7 monitoring, it may be preferable to use runqlen first to identify issues (since it is cheaper to run), and then use runqlat ad hoc to quantify the latency.

This per-CPU output (with -C option) is useful for checking scheduler balance.

# runqlen-bpfcc -TC 1

Sampling run queue length... Hit Ctrl-C to end.

02:55:30

cpu = 2

runqlen : count distribution

0 : 99 |****************************************|

cpu = 4

runqlen : count distribution

0 : 99 |****************************************|

cpu = 3

runqlen : count distribution

0 : 99 |****************************************|

cpu = 1

runqlen : count distribution

0 : 99 |****************************************|

cpu = 5

runqlen : count distribution

0 : 99 |****************************************|

cpu = 6

runqlen : count distribution

0 : 99 |****************************************|

cpu = 7

runqlen : count distribution

0 : 99 |****************************************|

cpu = 0

runqlen : count distribution

0 : 99 |****************************************|

Run queue occupancy (-O) is a separate metric that shows the percentage of time

that there were threads waiting.

# runqlen-bpfcc -TOC 1

Sampling run queue length... Hit Ctrl-C to end.

02:58:00

runqocc, CPU 0 0.00%

runqocc, CPU 1 0.00%

runqocc, CPU 2 0.00%

runqocc, CPU 3 0.00%

runqocc, CPU 4 0.00%

runqocc, CPU 5 0.00%

runqocc, CPU 6 0.00%

runqocc, CPU 7 0.00%

runqlen.bt

#!/usr/bin/env bpftrace

#include <linux/sched.h>

// Until BTF is available, we'll need to declare some of this struct manually,

// since it isn't available to be #included. This will need maintenance to match

// your kernel version. It is from kernel/sched/sched.h:

struct cfs_rq_partial {

struct load_weight load;

unsigned long runnable_weight;

unsigned int nr_running;

unsigned int h_nr_running;

};

BEGIN

{

printf("Sampling run queue length at 99 Hertz... Hit Ctrl-C to end.\n");

}

profile:hz:99

{

$task = (struct task_struct *)curtask;

$my_q = (struct cfs_rq_partial *)$task->se.cfs_rq;

$len = $my_q->nr_running;

$len = $len > 0 ? $len - 1 : 0; // subtract currently running task

@runqlen = lhist($len, 0, 100, 1);

}

This bpftrace version can break down by CPU, by adding a cpu key to @runqlen.

6.3.5 runqslower

runqslower lists instances of run queue latency exceeding a threshold (default is 10ms).

# runqslower-bpfcc

Tracing run queue latency higher than 10000 us

TIME COMM PID LAT(us)

03:05:41 b'kworker/6:1' 70686 12046

03:05:41 b'systemd' 1 12603

[...]

This currently works by using kprobes for the kernel functions ttwu_do_wakeup(), wake_up_new_task(), and finish_task_switch().

A future version should switch to scheduler tracepoints, using code similar to the earlier bpftrace version of runqlat.

The overhead is similar to that of runqlat; it can cause noticeable overhead on busy systems due to the cost of the kprobes, even while runqslower is not printing any output.

6.3.6 cpudist

cpudist show distribution of on-CPU time for each thread wakeup. This can be used to help characterize CPU workloads, providing details for later tuning and design decisions.

# cpudist -m

Tracing on-CPU time... Hit Ctrl-C to end.

^C

msecs : count distribution

0 -> 1 : 521 |****************************************|

2 -> 3 : 60 |*** |

4 -> 7 : 272 |******************** |

8 -> 15 : 308 |*********************** |

16 -> 31 : 66 |***** |

32 -> 63 : 14 |* |

The mode of on-CPU durations from 4 to 15 milliseconds is likely threads exhausting their scheduler time quanta and then encountering an involuntary context switch.

This works by tracing scheduler context switch events, which can be very frequent on busy production workloads (>1M events/sec). As with runqlat, the overhead of this tool could be significant.

6.3.7 cpufreq

cpufreq samples the CPU frequency and shows it as a system-wide histogram, with per-process name histograms.

The performance overhead should be low to negligible.

cpufreq.bt

#!/usr/bin/bpftrace

BEGIN

{

printf("Sampling CPU freq system-wide & by process. Ctrl-C to end.\n");

}

tracepoint:power:cpu_frequency

{

@curfreq[cpu] = args->state;

}

profile:hz:100

/@curfreq[cpu]/

{

@system_mhz = lhist(@curfreq[cpu] / 1000, 0, 5000, 200);

if (pid) {

@process_mhz[comm] = lhist(@curfreq[cpu] / 1000, 0, 5000, 200);

}

}

END

{

clear(@curfreq);

}

The frequency changes are traced using the power:cpu_frequency tracepoint, and saved in a @curfreq BPF map by CPU, for later lookup while sampling. The histograms track frequencies from 0 to 5000 MHz in steps of 200 MHz; these parameters can be adjusted in the tool if needed.

6.3.8 profile

profile samples stack traces at a timed interval, and emits a frequency count of stack traces. This is the most useful tool for understanding CPU consumption as it summarizes almost all code paths that are consuming CPU resources (see hardirq for more CPU consumers).

It can also be used with relatively negligible overhead, as the event rate is fixed to the sample rate, which can be tuned.

By default, it samples both user and kernel stack traces at 49 Hertz across all CPUs. This can be customized using options.

CPU Flame Graphs

Flame graphs are a visualization of stack traces, and can help you quickly understand

profile output.

# profile -af 30 > out.stacks01

$ git clone https://github.com/brendangregg/FlameGraph

$ cd FlameGraph

$ ./flamegraph.pl --color=java < ../out.stacks01 > out.svg

The hottest code paths are the widest towers.

profile is different from other CPU profilers, it calculates frequency count in kernel space for efficiency. Other kernel-based profilers, such as perf, emit each sampled stack trace to user space, where it is post-processed into a summary. This can be CPU expensive and, depending on the invocation, it can also involve file system and disk I/O to record the samples. profile avoids those expenses.

The core functionality can be implemented as a bpftrace one-liner:

bpftrace -e 'profile:hz:49 /pid/ { @samples[ustack, kstack, comm] = count(); }'

6.3.9 offcputime

offcputime is used to summarize time spent by threads blocked and off-CPU, showing stack traces to explain why. For CPU analysis, this explains why threads are not running on CPU. It’s a counterpart to profile: between them, they show the entire time spent by threads on the system, both on-CPU with profile and off-CPU with offcputime.

offcputime has been used to find various production issues, including finding unexpected time blocked in lock acquisition and the stack traces responsible.

offcputime works by instrumenting context switches and recording the time from when a thread leaves the CPU to when it returns, along with the stack trace. The times and stack traces are frequency-counted in kernel context for efficiency. Context switch events can nonetheless be very frequent, and the overhead of this tool can become significant (say, >10%) for busy production workloads.

Off-CPU Time Flame Graphs

# offcputime -fKu 5 > out.offcputime01.txt

$ flamegraph.pl --hash --bgcolors=blue -- title="Off-CPU Time Flame Graph" \

< out.offcputime01.txt > out.offcputime01.svg

By filtering to only record one PID or stack type can help reduce overhead.

offcputime.bt

#!/usr/bin/bpftrace

#include <linux/sched.h>

BEGIN

{

printf("Tracing nanosecond time in off-CPU stacks. Hit Ctrl-C to end.\n");

}

kprobe:finish_task_switch

{

// record previous thread sleep time

$prev = (struct task_struct *)arg0;

if ($1 == 0 || $prev->tgid == $1) {

@start[$prev->pid] = nsecs;

}

// get the current thread start time

$last = @start[tid];

if ($last != 0) {

@[kstack, ustack, comm] = sum(nsecs - $last);

delete(@start[tid]);

}

}

END

{

clear(@start);

}

6.3.10 syscount

syscount is a tool to count system calls system-wide.

It can be a starting point for investigating cases of high system CPU time.

sudo apt install auditd -y

# syscount-bpfcc -i 1

Tracing syscalls, printing top 10... Ctrl+C to quit.

[14:21:04]

SYSCALL COUNT

pselect6 57

read 53

bpf 13

rt_sigprocmask 8

write 5

ioctl 3

gettid 3

epoll_pwait 2

getpid 2

timerfd_settime 1

The -P option can be used to count by process ID instead:

# syscount-bpfcc -Pi 1

Tracing syscalls, printing top 10... Ctrl+C to quit.

[14:22:06]

PID COMM COUNT

1 systemd 349

741 systemd-journal 109

2544 agent-proxy 102

809 systemd-logind 32

774 dbus-daemon 29

802 rsyslogd 21

283137 syscount-bpfcc 19

282033 sshd 18

282989 auditd 6

782 NetworkManager 2

The overhead of this tool may become noticeable for very high syscall rates.

Similar bpftrace one-liner:

# bpftrace -e 't:syscalls:sys_enter_* { @[probe] = count(); }'

Attaching 286 probes...

^C

[...]

@[tracepoint:syscalls:sys_enter_recvmsg]: 615

@[tracepoint:syscalls:sys_enter_read]: 629

@[tracepoint:syscalls:sys_enter_faccessat]: 787

@[tracepoint:syscalls:sys_enter_close]: 836

@[tracepoint:syscalls:sys_enter_gettid]: 906

@[tracepoint:syscalls:sys_enter_ioctl]: 1327

@[tracepoint:syscalls:sys_enter_epoll_pwait]: 2179

This currently involves a delay during program startup and shutdown to instrument all these tracepoints. It’s preferable to use the single raw_syscalls:sys_enter tracepoint, as BCC does.

6.3.11 argdist & trace

If a syscall was found to be called frequently, we can use these tools to examine it in more detail.

For the tracepoint, we will need to find the argument names, which the BCC tool tplist prints out with the -v option:

# tplist -v syscalls:sys_enter_read

syscalls:sys_enter_read

int __syscall_nr;

unsigned int fd;

char * buf;

size_t count;

# argdist -H 't:syscalls:sys_enter_read():int:args->count'

# bpftrace -e 't:syscalls:sys_enter_read { @ = hist(args->count); }'

# argdist -H 't:syscalls:sys_exit_read():int:args->ret'

# bpftrace -e 't:syscalls:sys_exit_read { @ = hist(args->ret); }'

Examples:

# argdist-bpfcc -H 't:syscalls:sys_enter_read():int:args->count'

[14:51:01]

args->count : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 1 | |

16 -> 31 : 0 | |

32 -> 63 : 0 | |

64 -> 127 : 0 | |

128 -> 255 : 0 | |

256 -> 511 : 0 | |

512 -> 1023 : 0 | |

1024 -> 2047 : 0 | |

2048 -> 4095 : 0 | |

4096 -> 8191 : 0 | |

8192 -> 16383 : 50 |****************************************|

16384 -> 32767 : 1 | |

# bpftrace -e 't:syscalls:sys_enter_read { @ = hist(args->count); }'

Attaching 1 probe...

^C

@:

[8, 16) 1 | |

[16, 32) 0 | |

[32, 64) 0 | |

[64, 128) 0 | |

[128, 256) 0 | |

[256, 512) 0 | |

[512, 1K) 0 | |

[1K, 2K) 0 | |

[2K, 4K) 0 | |

[4K, 8K) 0 | |

[8K, 16K) 279 |@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@|

[16K, 32K) 3 | |

# bpftrace -e 't:syscalls:sys_exit_read { @ = hist(args->ret); }'

Attaching 1 probe...

^C

@:

(..., 0) 3 | |

[0] 38 |@@ |

[1] 700 |@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@|

[2, 4) 4 | |

[4, 8) 6 | |

[8, 16) 17 |@ |

[16, 32) 6 | |

[32, 64) 18 |@ |

[64, 128) 2 | |

[128, 256) 4 | |

[256, 512) 5 | |

[512, 1K) 12 | |

[1K, 2K) 1 | |

[2K, 4K) 2 | |

bpftrace has a separate bucket for negative values (“(…, 0)”), which are error codes returned by read to indicate an error. We can craft a bpftrace one-liner to print these as a frequency count or a linear histogram, so that their individual numbers can be seen:

# bpftrace -e 't:syscalls:sys_exit_read /args->ret < 0/ { @ = lhist(- args->ret, 0, 100, 1); }'

6.3.12 funccount

funccount is a tool that can frequency-count functions and other events. It can be used to provide more context for software CPU usage, by showing which functions are called and how frequently. profile may show that a function is hot on-CPU, but it can’t explain why: whether the function is slow, or whether it was simply called millions of times per second.

# funccount-bpfcc 'vfs_*'

Tracing 67 functions for "b'vfs_*'"... Hit Ctrl-C to end.

^C

FUNC COUNT

b'vfs_setxattr' 1

b'vfs_symlink' 1

b'vfs_removexattr' 1

b'vfs_unlink' 2

b'vfs_statfs' 2

b'vfs_rename' 2

b'vfs_readlink' 3

b'vfs_rmdir' 4

b'vfs_mkdir' 4

b'vfs_write' 34

b'vfs_lock_file' 68

b'vfs_statx' 84

b'vfs_statx_fd' 87

b'vfs_getattr' 165

b'vfs_getattr_nosec' 165

b'vfs_open' 178

b'vfs_read' 851

Detaching...

# funccount-bpfcc -Ti 5 'vfs_*'

The overhead of this tool is relative to the rate of the functions. Some functions, such as malloc() and get_page_from_freelist(), tend to occur frequently, so tracing them can slow down the target application significantly, in excess of 10%.

# bpftrace -e 'k:vfs_* { @[probe] = count(); }'

# bpftrace -e 'k:vfs_* { @[probe] = count(); } interval:s:1 { print(@); clear(@); }'

6.3.13 softirqs

softirqs is a tool that shows the time spent servicing soft IRQs (soft interrupts).

softirqs can show time per soft IRQ, rather than event count as shown in mpstat

or /proc/softirqs.

$ cat /proc/softirqs

CPU0 CPU1 CPU2 CPU3 CPU4 CPU5 CPU6 CPU7

HI: 12 0 0 0 0 0 0 0

TIMER: 1735349 2682998 1224456 885852 1325406 1300100 844205 796172

NET_TX: 9 32932 8 34075 2 1 1 2

NET_RX: 387770 30291 53536 69207 23892 32315 18903 8850

BLOCK: 103720 17802 14277 8427 33687 18968 17084 7898

IRQ_POLL: 0 0 0 0 0 0 0 0

TASKLET: 986086 401 601 505 129 116 148 88

SCHED: 1371691 2456003 917788 552230 487432 842298 443486 409531

HRTIMER: 0 0 0 0 0 0 0 0

RCU: 976423 786287 703830 621370 660607 806616 608495 556455

It works by using the irq:softirq_enter and irq:softirq_exit tracepoints.

The overhead of this tool is relative to the event rate.

# softirqs-bpfcc 10 1

Tracing soft irq event time... Hit Ctrl-C to end.

SOFTIRQ TOTAL_usecs

net_tx 36

block 222

net_rx 1144

rcu 3468

sched 5401

timer 6496

tasklet 24426

hi 45192

The -d option can be used to explore the distribution, and identify if there are latency outliers while servicing these interrupts.

# softirqs-bpfcc 10 1 -d

Tracing soft irq event time... Hit Ctrl-C to end.

softirq = net_tx

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 0 | |

16 -> 31 : 1 |****************************************|

32 -> 63 : 1 |****************************************|

softirq = timer

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 8 |*** |

8 -> 15 : 50 |************************ |

16 -> 31 : 82 |****************************************|

32 -> 63 : 65 |******************************* |

64 -> 127 : 1 | |

softirq = hi

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 291 |*********** |

16 -> 31 : 0 | |

32 -> 63 : 1052 |****************************************|

softirq = rcu

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 9 |******** |

8 -> 15 : 42 |****************************************|

16 -> 31 : 39 |************************************* |

32 -> 63 : 8 |******* |

64 -> 127 : 3 |** |

128 -> 255 : 2 |* |

softirq = sched

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 1 | |

4 -> 7 : 13 |***** |

8 -> 15 : 31 |************* |

16 -> 31 : 89 |****************************************|

32 -> 63 : 43 |******************* |

64 -> 127 : 3 |* |

softirq = net_rx

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 0 | |

16 -> 31 : 1 |************* |

32 -> 63 : 3 |****************************************|

64 -> 127 : 2 |************************** |

128 -> 255 : 2 |************************** |

softirq = block

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 0 | |

16 -> 31 : 1 |********** |

32 -> 63 : 4 |****************************************|

softirq = tasklet

usecs : count distribution

0 -> 1 : 0 | |

2 -> 3 : 0 | |

4 -> 7 : 0 | |

8 -> 15 : 2 | |

16 -> 31 : 121 |************ |

32 -> 63 : 383 |****************************************|

64 -> 127 : 5 | |

6.3.14 hardirqs

hardirqs is a BCC tool that shows time spent servicing hard IRQs (hard interrupts).

# hardirqs 10 1

This currently works by using dynamic tracing of the handle_irq_event_percpu() kernel function, although a future version should switch to the irq:irq_handler_entry and irq:irq_handler_exit tracepoints.

6.3.15 Other Tools

- cpuwalk from bpftrace samples which processes CPUs were running on, printing the result as a linear histogram. This provides a histogram view of CPU balance.

- cpuunclaimed from BCC is an experimental tool that samples CPU run queue lengths and determines how often there are idle CPUs, yet threads in a runnable state on a different run queue. This sometimes happens due to CPU affinity, but if it happens often it may be a sign of a scheduler mis-configuration or bug.

- loads from bpftrace is an example of fetching the load averages from a BPF tool. As discussed earlier, these numbers are misleading.

- vltrace is a tool in development by Intel that will be a BPF-powered version of strace, and can be used for further characterization of syscalls that are consuming CPU time.

6.4 BPF ONE-LINERS

New processes with arguments:

execsnoop

bpftrace -e 'tracepoint:syscalls:sys_enter_execve { join(args->argv); }'

Show who is executing what:

trace 't:syscalls:sys_enter_execve "-> %s", args->filename'

bpftrace -e 'tracepoint:syscalls:sys_enter_execve { printf("%s -> %s\n", comm, str(args->filename)); }'

Syscall count by program:

bpftrace -e 'tracepoint:raw_syscalls:sys_enter { @[comm] = count(); }'

Syscall count by process:

syscount -P

bpftrace -e 'tracepoint:raw_syscalls:sys_enter { @[pid, comm] = count(); }'

Syscall count by syscall name:

syscount

Syscall count by syscall probe name:

bpftrace -e 'tracepoint:syscalls:sys_enter_* { @[probe] = count(); }'

Sample running process name at 99 Hertz:

bpftrace -e 'profile:hz:99 { @[comm] = count(); }'

Sample user-level stacks at 49 Hertz, for PID 189:

profile -F 49 -U -p 189

bpftrace -e 'profile:hz:49 /pid == 189/ { @[ustack] = count(); }'

Sample all stack traces and process names:

profile

bpftrace -e 'profile:hz:49 { @[ustack, kstack, comm] = count(); }'

Sample running CPU at 99 Hertz and show as a linear histogram:

bpftrace -e 'profile:hz:99 { @cpu = lhist(cpu, 0, 256, 1); }'

Count kernel functions beginning with ‘vfs_’:

funccount 'vfs_*'

bpftrace -e 'kprobe:vfs_* { @[func] = count(); }'

Count SMP calls by name and kernel stack:

bpftrace -e 'kprobe:smp_call* { @[probe, kstack(5)] = count(); }'

6.5 OPTIONAL EXERCISES

- Use execsnoop to show the new processes for the “man ls” command.

- Run execsnoop with -t and output to a log file for ten minutes on a production or local system; what new processes did you find?

- Create an overloaded CPU. This creates two CPU-bound threads that are bound

to CPU 0:

taskset -c 0 sh -c 'while :; do :; done' & taskset -c 0 sh -c 'while :; do :; done' &Now use

uptime(load averages),mpstat(-P ALL),runqlen, andrunqlatto characterize the workload on CPU 0. - Develop a tool/one-liner to sample kernel stacks on CPU 0 only.

- Use profile to capture kernel CPU stacks to determine where CPU time is spent

by the following workload:

dd if=/dev/nvme0n1p3 bs=8k iflag=direct | dd of=/dev/null bs=1Modify the infile (if=) device to be a local disk. You can either profile system-wide, or filter for each of those dd processes.

- Generate a CPU flame graph of the (5) output.

- Use offcputime to capture kernel CPU stacks to determine where blocked time is spent for the (5) workload.

- Generate an off-CPU time flame graph for the (7) output.

- execsnoop only sees new processes that call exec (execve), although some may fork or clone and not exec (e.g., the creation of worker processes). Write a new tool called procsnoop to show all new processes with as many details as possible. You could trace fork and clone, or use the sched tracepoints, or something else.

- Develop a bpftrace version of softirqs that prints the softirq name.

- Implement cpudist in bpftrace.

- With cpudist (either version), show separate histograms for voluntary and involuntary context switches.

- (Advanced, unsolved) Develop a tool to show a histogram of time spent by tasks in CPU affinity wait: runnable while other CPUs are idle, but not migrated due to cache warmth (see kernel.sched_migration_cost_ns, task_hot() (which may be inlined and not tracable), and can_migrate_task()).

Chapter 7. Memory

Learning objectives:

- Understand memory allocation and paging behavior.

- Learn a strategy for successful analysis of memory behavior using tracers.

- Use traditional tools to understand memory capacity usage.

- Use BPF tools to identify code paths causing heap and RSS growth.

- Characterize page faults by filename and stack trace.

- Analyze the behavior of the VM scanner.

- Determine the performance impact of memory reclaim.

- Identify which processes are waiting for swap-ins.

- Use bpftrace one-liners to explore memory usage in custom ways.

7.1 BACKGROUND

7.1.1 Memory Allocators

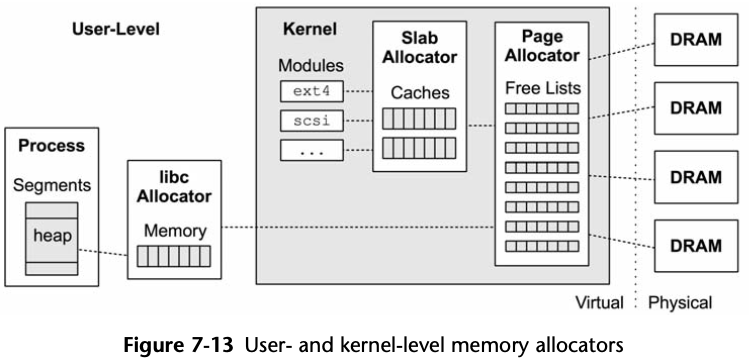

Figure 7-1 Memory Allocators

Figure 7-1 Memory Allocators

For processes using libc for memory allocation, memory is stored on a dynamic segment of the process’ virtual address space called the heap.

libc needs to extend the size of the heap only when there is no available memory.

For efficiency, memory mappings are created in groups of memory called pages.

The kernel can service physical memory page requests from its own free lists, which it maintains for each DRAM group and CPU for efficiency. The kernel’s own software also consumes memory from these free lists as well, usually via a kernel allocator such as the slab allocator.

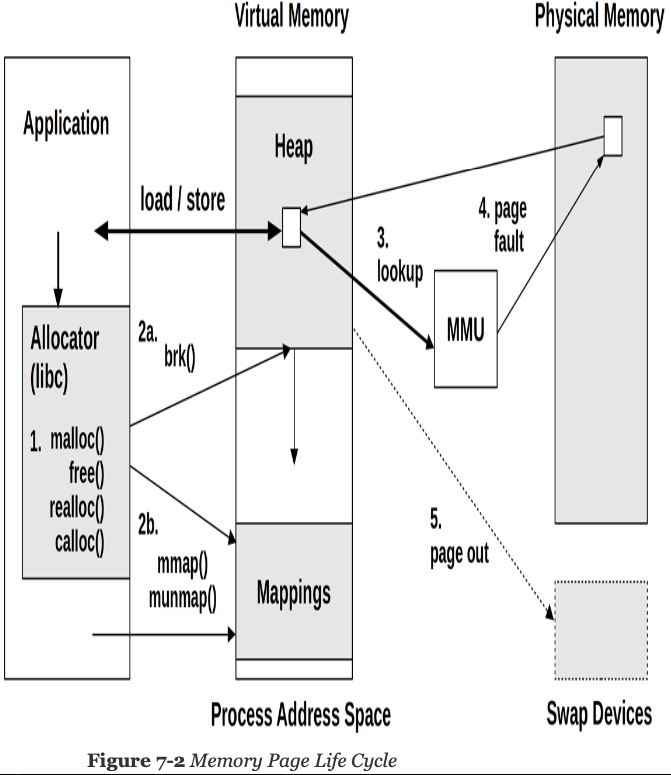

7.1.2 Memory Pages and Swapping

The amount of physical memory in use by the process is called its resident set size (RSS).

kswapd will free one of three types of memory:

a) File system pages that were read from disk and not modified (termed “backed by

disk”). These pages are application-executable text, data, and file system metadata.

b) File system pages that have been modified.

c) Pages of application memory. These are called anonymous memory, because they have no file origin. If swap devices are in use, these can be freed by first storing them on a swap device. This writing of pages to a swap device is termed swapping.

User-level allocations can occur millions of times per second for a busy application. Load and store instructions and MMU lookups are even more frequent, and can occur billions of times per second.

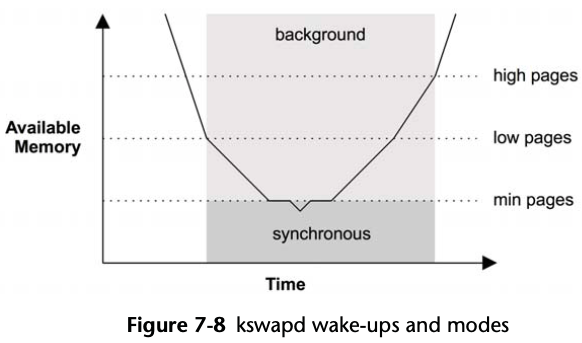

7.1.3 Page-Out Daemon(kswapd)

It is woken up when free memory crosses a low threshold, and goes back to sleep when it crosses a high threshold, as shown below.

Figure 7-3 kswapd wake-ups and modes

Figure 7-3 kswapd wake-ups and modes

Direct reclaim is a foreground mode of freeing memory to satisfy allocations. In this mode, allocations block (stall) and synchronously wait for pages to be freed.

Direct reclaim can call kernel module shrinker functions: these free up memory that may have been kept in caches, including the kernel slab caches.

7.1.4 Swap Devices

Swap devices provide a degraded mode of operation for a system running out of memory: processes can continue to allocate, but less frequently-used pages are now moved to and from their swap devices, which usually causes applications to run much more slowly.

Some production systems run without swap.

7.1.5 OOM Killer

The heuristic looks for the largest victim that will free many pages, and which isn’t a critical task such as kernel threads or init (PID 1). Linux provides ways to tune the behavior of the OOM killer system-wide and per-process.

7.1.6 Page Compaction

7.1.7 File System Caching and Buffering

Linux can be tuned to prefer freeing from the file system cache, or freeing memory via swapping (vm.swappiness).

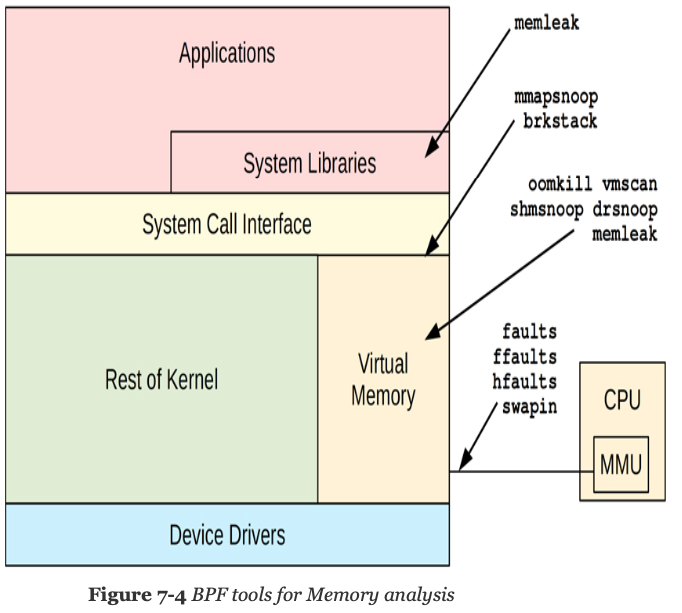

7.1.8 BPF Capabilities

BPF tracing tools can provide additional insight for memory activity, answering:

- Why does the process physical memory (RSS) keep growing?

- What code paths are causing page faults? For which files?

- What processes are blocked waiting on swap-ins?

- What memory mappings are being created system-wide?

- What is the system state at the time of an OOM kill?

- What application code paths are allocating memory?

- What types of objects are allocated by applications?

- Are there memory allocations that are not freed after a while? (potential leaks)?

Event Sources

| Event Type | Event Source |

|---|---|

| User memory allocations | uprobe on allocator functions, libc USDT probes |

| Kernel memory allcations | kprobes on allocator functions, kmem tracepoints |

| Heap expansions | brk syscall tracepoint |

| Share memory functions | syscall tracepoints |

| Page faults | kprobes, software events, exception tracepoints |

| Page migrations | migration tracepoints |

| Page compaction | compaction tracepoints |

| VM scanner | vmscan tracepoints |

| Memory access cycles | PMCs |

Overhead

Memory allocation events can occur millions of times per second, calling them

millions of times per second can add up to significant overhead, slowing the target

software by over 10%, and in some cases by ten times (10x),

depending on the rate of events traced and the BPF program used.

Many questions about memory usage can be answered, or approximated, by tracing the infrequent events: page faults, page outs, brk() calls, and mmap() calls. The overhead of tracing these can be negligible.

One reason to trace the malloc() calls is to show the code paths that led to malloc(). These code paths can be revealed using a different technique: timed sampling of CPU stacks, as covered in the previous chapter. Searching for “malloc” in a CPU flame graph is a coarse but cheap way to identify the code paths calling this function frequently.

The performance of uprobes may be greatly improved in the future (10-100x) through dynamic libraries involving user- to user-space jumps, rather than kernel traps.

7.1.9 Strategy

- Check kernel messages to see if the OOM killer has recently killed processes

- Check if the system has swap devices and the amount of swap in use, and if those devices have active I/O (eg, using swap, iostat, vmstat).

- Check the amount of free memory on the system, and system-wide usage by caches (eg, free).

- Check per-process memory usage (eg, using top, ps).

- Check the page fault rate, and examine stack traces on page faults: this can explain RSS growth.

- Check the files that were backing page faults.

- Trace brk() and mmap() calls for a different view of memory usage.

- Browse and execute the BPF tools listed in the BPF tools section of this book.

- Measure hardware cache misses and memory accesses using PMCs (especially with PEBS enabled) to determine functions and instructions causing memory I/O (e.g., using perf).

7.2 TRADITIONAL TOOLS

Table of traditional tools

| Tool | Type | Description |

|---|---|---|

| dmesg | Kernel Log | OOM killer event details |

| swapon | Kernel Statistics | Swap device usage |

| free | Kernel Statistics | System-wide memory usage |

| ps | Kernel Statistics | Process statistics including memory usage |

| pmap | Kernel Statistics | Process memory usage by segment |

| vmstat | Kernel Statistics | Various statistics including memory |

| sar | Kernel Statistics | Can show page fault and page scanner rates |

| perf | Software Events, Hardware Statistics, Hardware Sampling | Memory-related PMC statistics and event sampling |

7.2.1 Kernel Log

OOM

7.2.2 Kernel Statistics

swapon

$ swapon

NAME TYPE SIZE USED PRIO

/dev/sda3 partition 977M 766.2M -2

free

free -w

It includes an “available” column, showing how much memory is available for use, including the file system cache.

ps

ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

root 1 0.3 0.5 167232 10412 ? Ss Aug24 33:44 /sbin/init

root 2 0.0 0.0 0 0 ? S Aug24 0:02 [kthreadd]

root 3 0.0 0.0 0 0 ? I< Aug24 0:00 [rcu_gp]

root 4 0.0 0.0 0 0 ? I< Aug24 0:00 [rcu_par_gp]

root 8 0.0 0.0 0 0 ? I< Aug24 0:00 [mm_percpu_wq]

root 9 0.0 0.0 0 0 ? S Aug24 0:11 [ksoftirqd/0]

- %MEM: The percentage of the system’s physical memory in use by this process.

- VSZ: Virtual memory size.

- RSS: Resident set size: total physical memory in use by this process.

# ps -eo pid,vsz,rss,name

PID VSZ RSS NAME

1 11984 2560 init

[...]

131 10888 3196 logd

132 5892 3168 servicemanager

133 8468 4916 hwservicemanager

134 5892 3124 vndservicemanager

136 8312 4720 android.hardware.keymaster@3.0-service

138 15156 5540 vold

153 24932 5608 netd

154 1028440 124220 zygote

157 6684 3232 android.hidl.allocator@1.0-service

158 7008 3476 healthd

160 15068 8056 android.hardware.audio@2.0-service

161 6804 3456 android.hardware.bluetooth@1.0-service

162 7584 4112 android.hardware.cas@1.0-service

164 8120 3540 android.hardware.configstore@1.1-service

165 6024 3436 android.hardware.graphics.allocator@2.0-service

166 12324 5476 android.hardware.graphics.composer@2.1-service

167 6716 3252 android.hardware.light@2.0-service.rpi3

169 6876 3364 android.hardware.memtrack@1.0-service

170 5884 3300 android.hardware.power@1.0-service

171 7816 3360 android.hardware.usb@1.0-service

172 7772 5200 android.hardware.wifi@1.0-service

173 43152 18432 audioserver

174 4856 1984 lmkd

176 63772 23744 surfaceflinger

177 8316 3944 thermalserviced

181 4488 2448 sh

182 3908 1032 adbd

187 5056 2384 su

188 24596 11268 cameraserver

189 18092 9756 drmserver

190 9528 4132 incidentd

191 13248 4284 installd

192 11836 6100 keystore

193 9612 5012 mediadrmserver

194 47672 13164 media.extractor

195 17880 9344 media.metrics

197 57552 14464 mediaserver

198 10760 4444 storaged

199 9312 4512 wificond

200 34472 11012 media.codec

201 10160 5220 gatekeeperd

202 8820 3352 perfprofd

203 4652 2344 tombstoned

245 2228 972 mdnsd

263 7424 2456 su

267 5312 1640 su

269 5440 1396 su

270 4488 2444 sh

312 1250296 176172 system_server

444 0 0 [kworker/1:2-mm_percpu_wq]

457 4860 2404 iptables-restore

458 4864 2468 ip6tables-restore

488 0 0 [sdcard]

490 855608 90164 com.android.inputmethod.latin

503 1020396 189740 com.android.systemui

715 875148 94356 com.android.phone

730 933000 103092 com.android.settings

838 849196 79700 com.android.deskclock

868 843104 69808 com.android.se

873 846264 72444 org.lineageos.audiofx

902 843304 71776 com.android.printspooler

920 848376 87940 android.process.media

937 968040 162932 com.android.launcher3

943 841964 69544 com.android.keychain

998 850016 75352 org.lineageos.lineageparts

1027 843752 85916 android.process.acore

1033 847288 77236 com.android.calendar

1077 849404 74056 com.android.contacts

1095 842676 77784 com.android.providers.calendar

1117 843940 70268 com.android.managedprovisioning

1138 0 0 [kworker/u8:2-events_unbound]

1140 839700 68076 com.android.onetimeinitializer

1157 849100 71832 com.android.packageinstaller

1175 847160 76060 com.android.traceur

1196 843736 70612 org.lineageos.lockclock

1214 844616 77912 org.lineageos.updater

[...]

These statistics and more can be found in /proc/PID/status.

pmap

This view can identify large memory consumers by libraries or mapped files.

This extended (-x) output includes a column for “dirty” pages.

# pmap -x `pidof surfaceflinger`

176: /system/bin/surfaceflinger

Address Kbytes PSS Dirty Swap Mode Mapping

0c4e1000 28 28 0 0 r-x-- surfaceflinger

0c4e9000 4 4 4 0 r---- surfaceflinger

0c4ea000 4 4 4 0 rw--- surfaceflinger

e3534000 4592 0 0 0 rw-s- gralloc-buffer (deleted)

e3e40000 1504 0 0 0 rw-s- gralloc-buffer (deleted)

e3fb8000 1504 0 0 0 rw-s- gralloc-buffer (deleted)

e4130000 1504 0 0 0 rw-s- gralloc-buffer (deleted)

e42a8000 1504 750 0 0 rw-s- gralloc-buffer (deleted)

e4a00000 512 136 136 0 rw--- [anon:libc_malloc]

e4aa7000 4 0 0 0 ----- [anon]

e4aa8000 1012 8 8 0 rw--- [anon]

e4ba5000 4 0 0 0 ----- [anon]

e4ba6000 1012 8 8 0 rw--- [anon]

e4ca3000 4 0 0 0 ----- [anon]

e4ca4000 1012 8 8 0 rw--- [anon]

e4f05000 1504 750 0 0 rw-s- gralloc-buffer (deleted)

e507d000 1504 750 0 0 rw-s- gralloc-buffer (deleted)

e51f5000 4592 2112 2112 0 rw-s- gralloc-buffer (deleted)

e5671000 20 5 0 0 r-x-- android.hardware.graphics.allocator@2.0-impl.so

e5676000 4 4 4 0 r---- android.hardware.graphics.allocator@2.0-impl.so

e5677000 4 4 4 0 rw--- android.hardware.graphics.allocator@2.0-impl.so

[...]

-------- ------ ------ ------ ------

total 63772 10506 4900 0

vmstat

sar

The -B option shows page statistics:

# sar -B 1

Linux 4.15.0-1031-aws (...) 01/26/2019 _x86_64_ (48 CPU)

06:10:38 AM pgpgin/s pgpgout/s fault/s majflt/s pgfree/s pgscank/s pgscand/s pgsteal/s %vmeff

06:10:39 PM 0.00 0.00 286.00 0.00 16911.00 0.00 0.00 0.00 0.00

06:10:40 PM 0.00 0.00 90.00 0.00 19178.00 0.00 0.00 0.00 0.00

06:10:41 PM 0.00 0.00 187.00 0.00 18949.00 0.00 0.00 0.00 0.00

06:10:42 PM 0.00 0.00 110.00 0.00 24266.00 0.00 0.00 0.00 0.00

[...]

The page fault rate (“fault/s”) is low, less than 300 per second. There also isn’t any page scanning (the “pgscan” columns), indicating that the system is likely not running at memory saturation.

7.2.3 Hardware Statistics and Sampling

7.3 BPF TOOLS

Table of Memory-related tools

| Tools | Source | Target | Overhead | Description |

|---|---|---|---|---|

| oomkill | bcc/bt | OOM | negligible | Show extra info on OOM kill events |

| memleak | bcc | Sched | Show possible memory leak codepaths | |

| mmapsnoop | book | Syscalls | negligible | Trace mmap calls system wide |

| brkstack | book | Syscalls | negligible | Show brk() calls with user stack traces |

| shmsnoop | bcc | Syscalls | negligible | Trace shared memory calls with details |

| faults | book | Faults | Show page faults by user stack trace | |

| ffaults | book | Faults | noticeable* | Show page faults by filename |

| vmscan | book | VM | Measure VM scanner shrink and reclaim times | |

| drsnoop | bcc | VM | negligible | Trace direct reclaim events showing latency |

| swapin | book | VM | Show swap-ins by process | |

| hfaults | book | Faults | Show huge page faults by process |

7.3.1 oomkill

# oomkill

Tracing OOM kills... Ctrl-C to stop.

08:51:34 Triggered by PID 18601 ("perl"), OOM kill of PID 1165 ("java"), 18006224 pages,

loadavg: 10.66 7.17 5.06 2/755 18643

[...]

This tool can be enhanced to print other details as desired when debugging OOM events. There are also oom tracepoints that can reveal more details about how tasks are selected, which are not yet used by this tool.

This tool may also be useful to customize for investigations; for example, by adding other task_struct details at the time of the OOM, or other commands in the system() call.

oomkill.bt

#!/usr/bin/env bpftrace

#include <linux/oom.h>

BEGIN

{

printf("Tracing oom_kill_process()... Hit Ctrl-C to end.\n");

}

kprobe:oom_kill_process

{

$oc = (struct oom_control *)arg0;

time("%H:%M:%S ");

printf("Triggered by PID %d (\"%s\"), ", pid, comm);

printf("OOM kill of PID %d (\"%s\"), %d pages, loadavg: ",

$oc->chosen->pid, $oc->chosen->comm, $oc->totalpages);

cat("/proc/loadavg");

}

7.3.2 memleak

Without a -p PID provided, memleak will trace kernel allocations.

7.3.3 mmapsnoop

This tool works by instrumenting the syscalls:sys_enter_mmap tracepoint.

The overhead of this tool should be negligible as the rate of new mappings should be relatively low.

7.3.4 brkstack

# trace -U t:syscalls:sys_enter_brk

# stackcount -PU t:syscalls:sys_enter_brk

brk growths are infrequent, the overhead of tracing these is negligible, making this an inexpensive tool for finding some clues about memory growth.

The stack trace may reveal a large and unusual allocation that needed more space than was available, or a normal code path that happened to need one byte more than was available. The code path will need to be studied to determine which is the case.

brkstack.bt

#!/usr/bin/bpftrace

tracepoint:syscalls:sys_enter_brk

{

@[ustack, comm] = count();

}

7.3.5 shmsnoop

This can be used for debugging shared memory usage. For example, during startup of a Java application.

This tool works by tracing the shared memory syscalls, which should be infrequent enough that the overhead of the tool is negligible.

7.3.6 faults

These page faults cause RSS growth.

faults.bt

#!/usr/bin/bpftrace

software:page-faults:1

{

@[ustack, comm] = count();

}

7.3.7 ffaults

# ffaults.bt Attaching 1 probe...

[...]

@[cat]: 4576

@[make]: 7054

@[libbfd-2.26.1-system.so]: 8325

@[libtinfo.so.5.9]: 8484

@[libdl-2.23.so]: 9137

@[locale-archive]: 21137

@[cc1]: 23083

@[ld-2.23.so]: 27558

@[bash]: 45236

@[libopcodes-2.26.1-system.so]: 46369

@[libc-2.23.so]: 84814

@[]: 537925

This output shows that the most page faults were to regions without a file name – this would be process heaps – with 537,925 faults occurring during tracing. The libc library encountered 84,814 faults while tracing. This is happening because the software build is creating many short-lived processes, which are faulting in their new address spaces.

ffaults.bt

#!/usr/bin/bpftrace

#include <linux/mm.h>

kprobe:handle_mm_fault

{

$vma = (struct vm_area_struct *)arg0;

$file = $vma->vm_file->f_path.dentry->d_name.name;

@[str($file)] = count();

}

The rate of file faults varies: check using tools such as perf or sar. For high rates, the overhead of this tool may begin to become noticeable.

7.3.8 vmscan

- S-SLABms: Total time in shrink slab, in milliseconds. This is reclaiming memory from various kernel caches.

- D-RECLAIMms: Total time in direct reclaim, in milliseconds. This is foreground reclaim, which blocks memory allocations while memory is written to disk.

- M-RECLAIMms: Total time in memory cgroup reclaim, in milliseconds. If memory cgroups are in use, this shows when one cgroup has exceeded its limit and its own cgroup memory is reclaimed.

- KSWAPD: Number of kswapd wakeups.

- WRITEPAGE: Number of kswapd page writes.

Look out for time in direct reclaims (D-RECLAIMms): this type of reclaim is “bad” but necessary, and will cause performance issues. It can hopefully be eliminated by tuning the other vm sysctl tunables to engage background reclaim sooner.

vmscan.bt

#!/usr/bin/bpftrace

tracepoint:vmscan:mm_shrink_slab_start

{

@start_ss[tid] = nsecs;

}

tracepoint:vmscan:mm_shrink_slab_end

/@start_ss[tid]/

{

$dur_ss = nsecs - @start_ss[tid];

@sum_ss = @sum_ss + $dur_ss;

@shrink_slab_ns = hist($dur_ss);

delete(@start_ss[tid]);

}

tracepoint:vmscan:mm_vmscan_direct_reclaim_begin

{

@start_dr[tid] = nsecs;

}

tracepoint:vmscan:mm_vmscan_direct_reclaim_end

/@start_dr[tid]/

{

$dur_dr = nsecs - @start_dr[tid];

@sum_dr = @sum_dr + $dur_dr;

@direct_reclaim_ns = hist($dur_dr);

delete(@start_dr[tid]);

}

tracepoint:vmscan:mm_vmscan_memcg_reclaim_begin

{

@start_mr[tid] = nsecs;

}

tracepoint:vmscan:mm_vmscan_memcg_reclaim_end

/@start_mr[tid]/

{

$dur_mr = nsecs - @start_mr[tid];

@sum_mr = @sum_mr + $dur_mr;

@memcg_reclaim_ns = hist($dur_mr);

delete(@start_mr[tid]);

}

tracepoint:vmscan:mm_vmscan_wakeup_kswapd

{

@count_wk++;

}

tracepoint:vmscan:mm_vmscan_writepage

{

@count_wp++;

}

BEGIN

{

printf(" %-10s %10s %12s %12s %6s %9s\n", "TIME",

"S-SLABms", "D-RECLAIMms", "M-RECLAIMms", "KSWAPD", "WRITEPAGE");

}

interval:s:1

{

time("%H:%M:%S");

printf(" %10d %12d %12d %6d %9d\n",

@sum_ss / 1000000, @sum_dr / 1000000, @sum_mr / 1000000,

@count_wk, @count_wp);

clear(@sum_ss);

clear(@sum_dr);

clear(@sum_mr);

clear(@count_wk);

clear(@count_wp);

}

7.3.9 drsnoop

# drsnoop -T

TIME(s) COMM PID LAT(ms) PAGES

0.000000000 java 11266 1.72 57

0.004007000 java 11266 3.21 57

0.011856000 java 11266 2.02 43

0.018315000 java 11266 3.09 55

0.024647000 acpid 1209 6.46 73

[...]

The rate of these and their duration can be considered in quantifying the application impact.

These are expected to be low-frequency events (usually happening in bursts), so the overhead should be negligible.

7.3.10 swapin

Swap-ins occur when the application tries to use memory that has been moved to the swap device. This is an important measure of the performance pain suffered by the application due to swapping.

Other swap metrics, like scanning and swap-outs, may not directly affect application performance.

swapin.bt

#!/usr/bin/bpftrace

kprobe:swap_readpage

{

@[comm, pid] = count();

}

interval:s:1

{

time();

print(@);

clear(@);

}

7.3.11 hfaults

hfaults traces huge page faults by their process details, and can be used to

confirm that huge pages are in use.

hfaults.bt

#!/usr/bin/bpftrace

BEGIN

{

printf("Tracing Huge Page faults per process... Hit Ctrl-C to end.\n");

}

kprobe:hugetlb_fault

{

@[pid, comm] = count();

}

7.4 BPF ONE-LINERS

Count process heap expansion (brk()) by user-level stack trace:

stackcount -U t:syscalls:sys_enter_brk

bpftrace -e 'tracepoint:syscalls:sys_enter_brk { @[ustack, comm] = count(); }'

Count vmscan operations by tracepoint:

funccount 't:vmscan:*'

bpftrace -e 'tracepoint:vmscan:* { @[probe] = count(); }'

Show hugepages madvise() calls by process:

trace hugepage_madvise

bpftrace -e 'kprobe:hugepage_madvise { printf("%s by PID %d\n", probe, pid); }'

Count page migrations:

funccount t:migrate:mm_migrate_pages

bpftrace -e 'tracepoint:migrate:mm_migrate_pages { @ = count(); }'

Trace compaction events:

trace t:compaction:mm_compaction_begin

bpftrace -e 't:compaction:mm_compaction_begin { time(); }'

7.5 OPTIONAL EXERCISES

- Run vmscan for ten minutes on a production or local server. If any time was spent in direct reclaim (D-RECLAIMms), also run drsnoop to measure this on a per-event basis.

- Modify vmscan to print the header every 20 lines, so that it remains on screen.

- During application startup (either a production or desktop application) use fault to count page fault stack traces. This may involve fixing or finding an application that supports stack traces and symbols.

- Create a page fault flame graph from the output of (3).

- Develop a tool to trace process virtual memory growth via both brk and mmap.

- Develop a tool to print the size of expansions via brk. This may use syscall tracepoints, kprobes, or libc USDT probes as desired.

- Develop a tool to show the time spent in page compaction. You can use the compaction:mm_compaction_begin and compaction:mm_compaction_end tracepoints. Print the time per event, and summarize it as a histogram.

- Develop a tool to show time spent in shrink slab, broken down by slab name (or shrinker function name).

- Use memleak to find long-term survivors on some sample software in a test environment. Also estimate the performance overhead with and without memleak running.

- (Advanced, unsolved) Develop a tool to investigate swap thrashing: show the time spent by pages on the swap device as a histogram. This likely involves measuring the time from swap out to swap in.

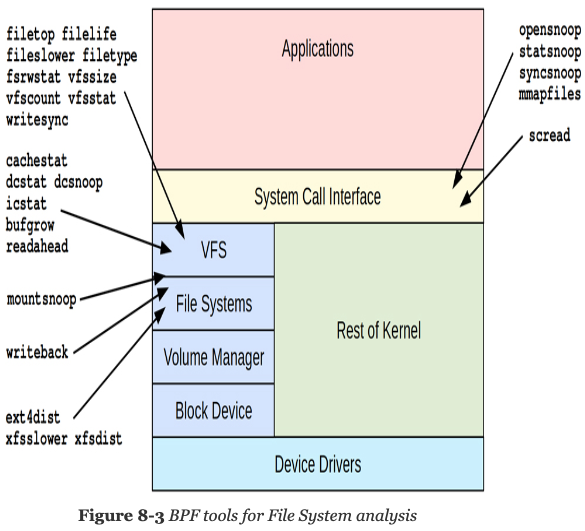

Chapter 8. File Systems

Learning objectives:

- Understand file system components: VFS, caches, write-back.

- Understand targets for file system analysis with BPF.

- Learn a strategy for successful analysis of file system performance.

- Characterize file system workloads by file, operation type, and by process.

- Measure latency distributions for file system operations, and identify bi-modal distributions and issues of latency outliers.

- Measure the latency of file system write-back events.

- Analyze page cache and read ahead performance.

- Observe directory and inode cache behavior.

- Use bpftrace one-liners to explore file system usage in custom ways.

8.1 BACKGROUND

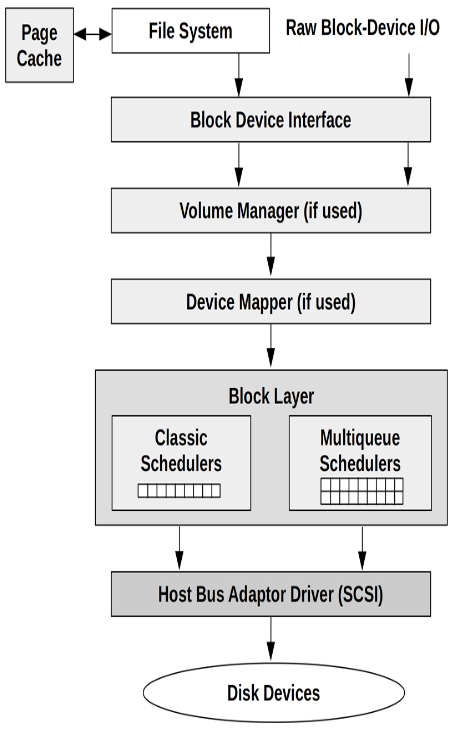

8.1.1 I/O Stack

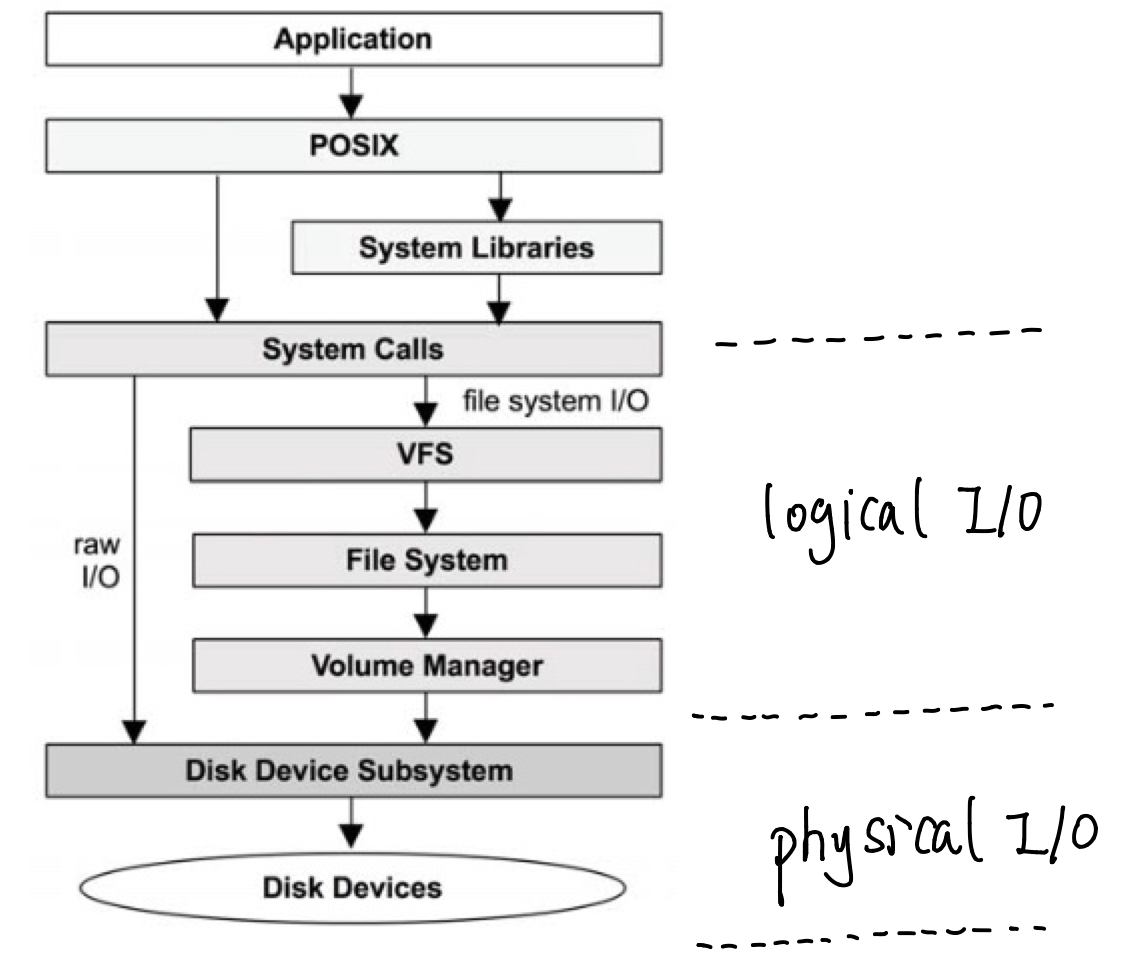

Figure 8-1 Generic I/O Stack

Figure 8-1 Generic I/O Stack

Logical I/O: describes requests to the file system.

Raw I/O: is rarely used nowadays: it is a way for applications to use disk devices with no file system.

8.1.2 File System Caches

Page cache: This contains virtual memory pages including the contents of files and I/O buffers (what was once a separate “buffer cache”), and improves the performance of file and directory I/O.

Inode cache: Inodes (index nodes) are data structures used by file systems to describe their stored objects. VFS has its own generic version of an inode, and Linux keeps a cache of these because they are frequently read for permission checks and other metadata.

Directory cache: caches mappings from directory entry names to VFS inodes, improving the performance of path name lookups.

8.1.3 Read Ahead

Read ahead improves read performance only for sequential access workloads.

8.1.4 Write Back

Buffers are dirtied in memory and flushed to disk sometime later by kernel worker threads, so as not to block applications directly on slow disk I/O.

8.1.5 BPF Capabilities

- What are the file system requests? Counts by type?

- What are the read sizes to the file system?

- How much write I/O was synchronous?

- What is the file workload access pattern: random or sequential?

- What files are accessed? By what process or code path? Bytes, I/O counts?

- What file system errors occurred? What type, and for whom?

- What is the source of file system latency? Is it disks, the code path, locks?

- What is the distribution of file system latency?

- What is the ratio of Dcache and Icache hits vs misses?

- What is the page cache hit ratio for reads?

- How effective is prefetch/read-ahead? Should this be tuned?

Event Sources

| I/O Type | Event Source |

|---|---|

| Application and library I/O | uprobes |

| System call I/O | syscalls tracepoints |

| File system I/O | ext4(…) tracepoints, kprobes |

| Cache hits (reads), write-back (writes) | kprobes |

| Cache misses (reads), write-through (writes) | kprobes |

| Page cache write-back | writeback tracepoints |

| Physical disk I/O | block tracepoints, kprobes |

| Raw I/O | kprobes |

ext4 provides over one hundred tracepoints.

Overhead

Logical I/O, especially reads and writes to the file system cache, can be very

frequent: over 100k events per second.

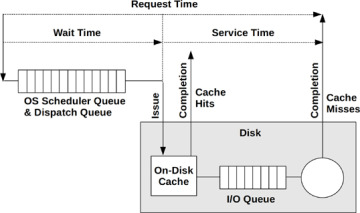

Physical disk I/O on most servers is typically so low (less than 1000 IOPS), that